
Category: SayPro Human Capital Works

SayPro is a global solutions provider working with individuals, governments, corporate businesses, municipalities, and international institutions. SayPro works across various industries and sectors, providing a wide range of solutions.

Email: info@saypro.online

-


SayPro Stakeholder Feedback and Engagement Data

Stakeholder feedback and engagement are essential for understanding how effective a program is. Together they help identify areas for improvement, measure satisfaction, and confirm that the program meets its stakeholders’ needs. The following sections outline the types of data that can be collected from stakeholders and methods for analyzing this feedback to improve SayPro’s program outcomes.

1. Stakeholder Satisfaction Survey

Definition: A tool used to gather quantitative and qualitative feedback from various stakeholders (e.g., beneficiaries, program staff, partners, and external collaborators) to assess their satisfaction with the program.

Key Data Points:

- Satisfaction with Program Objectives: How well the program is meeting its goals.

- Satisfaction with Program Delivery: Effectiveness of training, workshops, and other activities.

- Satisfaction with Communication: How well stakeholders feel informed about the program’s progress and goals.

- Satisfaction with Support: Whether stakeholders have the resources and support they need to succeed in the program.

Stakeholder Satisfaction Survey Data Example

| Question | Strongly Agree | Agree | Neutral | Disagree | Strongly Disagree | Average Rating (1-5, Strongly Agree = 5) |
|---|---|---|---|---|---|---|
| “The program met my expectations.” | 40% | 45% | 10% | 3% | 2% | 4.2 |
| “The training materials were relevant and helpful.” | 50% | 30% | 10% | 7% | 3% | 4.2 |
| “The communication about program updates was clear and timely.” | 45% | 40% | 8% | 5% | 2% | 4.2 |
| “I felt well supported by the program staff.” | 35% | 40% | 15% | 5% | 5% | 4.0 |
| “The program helped me gain new skills for my professional growth.” | 60% | 25% | 10% | 3% | 2% | 4.4 |

Analysis: The survey results show strong overall satisfaction: 85% agreed or strongly agreed that the program met their expectations and that it helped them gain new skills, the highest-rated item (4.4). Staff support received the lowest rating (4.0, with 10% disagreeing), and 7% disagreed that communication was clear and timely. Areas for improvement therefore include clearer communication and additional support for some participants.

2. Stakeholder Focus Group Feedback

Definition: In-depth discussions with a small group of stakeholders to gather qualitative insights into their experiences, challenges, and recommendations for improvement.

Key Data Points:

- Program Impact: Stakeholders’ perceived outcomes of the program, such as skills learned or professional benefits.

- Challenges: Specific obstacles or difficulties faced during program implementation.

- Suggestions for Improvement: Ideas on how the program could be improved to better meet stakeholder needs.

- Program Delivery: Stakeholders’ views on the delivery methods (e.g., online vs. in-person training) and their effectiveness.

Focus Group Feedback Example

Topic: Training Materials and Delivery

- Feedback: Stakeholders noted that while the training materials were valuable, some content could be more interactive. They suggested incorporating more case studies and real-life examples.

- Suggestions: Use a blended learning approach (mix of online learning, hands-on workshops) to engage participants better and offer flexible options for learning.

Topic: Job Placement Assistance

- Feedback: Several beneficiaries mentioned the need for more personalized job placement support, such as one-on-one coaching and tailored job recommendations.

- Suggestions: Strengthen relationships with local employers and create a dedicated team for job placement services.

Topic: Communication Channels

- Feedback: Stakeholders expressed a need for better communication about program timelines and changes in schedules.

- Suggestions: Use a program app or centralized portal to send updates and keep stakeholders informed about the latest news and announcements.

Analysis: Focus group feedback provides rich, actionable insights. For instance, moving towards blended learning and enhancing job placement services are two key areas to address in future iterations of the program.

3. Stakeholder Engagement Metrics

Definition: Quantitative data on the level of engagement of stakeholders with the program’s activities, including attendance at meetings, participation in surveys, and interactions with program materials.

Key Data Points:

- Event Attendance Rate: The percentage of stakeholders attending key events like workshops, training sessions, or review meetings.

- Survey Response Rate: The percentage of stakeholders who complete and submit surveys.

- Content Interaction Rate: The share of stakeholders who engage with program materials, whether through online platforms, printed resources, or other media.

Stakeholder Engagement Metrics Data Example

| Activity | Total Invitations | Total Participants | Engagement Rate | Comments |
|---|---|---|---|---|
| Training Session 1 | 150 | 120 | 80% | Good attendance, slight drop-off in remote areas |
| Mid-Term Feedback Survey | 120 | 100 | 83% | High response rate, clear stakeholder interest |
| Online Learning Module Completion | 200 | 150 | 75% | Moderate completion rate, needs more reminders |
| Stakeholder Review Meeting | 60 | 45 | 75% | Engaged participants, more follow-up required |

Analysis: High engagement rates for surveys and training sessions indicate strong stakeholder interest. However, engagement with online learning modules could be improved, suggesting the need for more targeted outreach or better reminders.
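Each engagement rate is the number of participants divided by the number of invitations. The sketch below recomputes the rates and flags activities that fall under a threshold. The 80% cut-off is an assumption borrowed from the 80% participant engagement target in the KPI section. The function names are hypothetical.

```python
# (activity, invitations, participants), copied from the example table
activities = [
    ("Training Session 1", 150, 120),
    ("Mid-Term Feedback Survey", 120, 100),
    ("Online Learning Module Completion", 200, 150),
    ("Stakeholder Review Meeting", 60, 45),
]

# Hypothetical cut-off, taken from the 80% participant engagement target
FOLLOW_UP_THRESHOLD = 0.80


def engagement_rate(invited, participated):
    """Participants divided by invitations."""
    if invited <= 0:
        raise ValueError("invited must be positive")
    return participated / invited


def needs_follow_up(rate, threshold=FOLLOW_UP_THRESHOLD):
    """True when an activity's engagement falls below the threshold."""
    return rate < threshold


for name, invited, participated in activities:
    rate = engagement_rate(invited, participated)
    flag = "follow up" if needs_follow_up(rate) else "ok"
    print(f"{name}: {rate:.0%} ({flag})")
```

With these numbers, two activities are flagged: the online learning modules and the stakeholder review meeting, both at 75%. This matches the reminder and follow-up notes in the Comments column.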

4. Beneficiary Feedback on Program Effectiveness

Definition: Feedback from program participants (beneficiaries) regarding their experience, focusing on program effectiveness, skill development, and overall impact.

Key Data Points:

- Skills Gained: Whether beneficiaries feel they have acquired new skills that are relevant to their personal or professional development.

- Program Relevance: Whether beneficiaries feel the program addressed their needs and expectations.

- Program Impact: The perceived long-term effects of the program, such as employment success or increased confidence.

Beneficiary Feedback Data Example

| Question | Yes | No | Not Sure | Comments |
|---|---|---|---|---|
| “Has the program helped you gain new skills?” | 85% | 10% | 5% | Majority feel more confident in their skills |
| “Did the program address your personal or professional needs?” | 75% | 20% | 5% | Participants felt the program was relevant |
| “Have you secured a job since completing the program?” | 60% | 30% | 10% | Many secured employment, others still in search |

Analysis: The data suggests that a large percentage of beneficiaries feel they have gained new skills and that the program met their needs. However, there may be opportunities for expanding job placement efforts, as 30% have not yet secured employment.

5. Partner Feedback and Collaboration Effectiveness

Definition: Feedback from program partners (e.g., employers, training organizations, or community stakeholders) about the effectiveness of collaboration, resource sharing, and achieving mutual goals.

Key Data Points:

- Partnership Satisfaction: Satisfaction with the partnership, communication, and overall experience working with SayPro.

- Collaboration Efficiency: How efficiently partners feel resources, information, and tasks are shared.

- Impact on Shared Goals: The degree to which partners feel the program has helped achieve shared objectives (e.g., increased employment, community development).

Partner Feedback Data Example

| Question | Very Satisfied | Satisfied | Neutral | Dissatisfied | Very Dissatisfied | Average Satisfaction Rating |
|---|---|---|---|---|---|---|
| “We are satisfied with the communication and coordination.” | 40% | 50% | 10% | 0% | 0% | 4.3/5 |
| “The program has helped us achieve our partnership goals.” | 35% | 45% | 15% | 5% | 0% | 4.1/5 |
| “We would be interested in continuing or expanding our partnership.” | 55% | 35% | 5% | 5% | 0% | 4.4/5 |

Analysis: Partner feedback indicates high satisfaction with communication and goal achievement. The willingness to expand partnerships reflects strong program collaboration and effective delivery.

Conclusion

Collecting and analyzing SayPro Stakeholder Feedback and Engagement Data enables the program team to gauge stakeholder satisfaction, identify areas for improvement, and ensure that the program is aligned with the needs of all involved parties. By tracking satisfaction, engagement, and outcomes, SayPro can continue to improve its programs and better meet the expectations of its stakeholders.

-


SayPro Data on KPIs and Performance Indicators

Key Performance Indicators (KPIs) and performance indicators (PIs) are essential tools for measuring the success and effectiveness of programs. These metrics help track progress against objectives, ensure alignment with program goals, and identify areas for improvement. Below is a breakdown of common KPIs and performance indicators that SayPro might track, including explanations for each and how to collect and analyze relevant data.

1. Participant Engagement Rate

- Definition: Measures the level of active participation of beneficiaries in the program’s activities.

- Target: 80% participant engagement rate.

- Data Collection: Track attendance at training sessions, workshops, webinars, and other program activities. Use participation logs or event tracking tools.

- Analysis: Compare the number of participants actively engaging with the total number of enrolled participants. A high engagement rate indicates interest and involvement, while a low rate may suggest barriers or disinterest that need to be addressed.

Example Data:

| Activity | Participants Attended | Total Registered | Engagement Rate |
|---|---|---|---|
| Training Session 1 | 120 | 150 | 80% |
| Virtual Workshop | 95 | 120 | 79% |
| Job Placement Assistance | 60 | 80 | 75% |

2. Program Completion Rate

- Definition: The percentage of participants who complete the program or specific program phases (e.g., training, workshops, assessments).

- Target: 85% program completion rate.

- Data Collection: Track participants who have successfully finished all required components of the program (e.g., training sessions, assignments, tests).

- Analysis: A high completion rate signals that the program is engaging and accessible, while a low rate could indicate issues with program content, scheduling, or participant support.

Example Data:

| Module/Phase | Participants Completed | Total Enrolled | Completion Rate |
|---|---|---|---|
| Initial Training Modules | 100 | 120 | 83% |
| Job Placement Follow-Up | 75 | 100 | 75% |
| Final Certification | 90 | 100 | 90% |

3. Job Placement Rate

- Definition: The percentage of program participants who successfully secure employment or job placements after completing the program.

- Target: 75% job placement rate.

- Data Collection: Track the number of participants who secure employment through direct follow-up, employer feedback, or job placement services.

- Analysis: A high job placement rate reflects program effectiveness in preparing participants for the job market, while a low rate may indicate that additional support is needed for job search or skills development.

Example Data:

| Participant Group | Placed in Jobs | Total Participants | Job Placement Rate |
|---|---|---|---|
| Technical Skills Program | 60 | 80 | 75% |
| Soft Skills Training | 40 | 50 | 80% |
| Total Program Placement | 100 | 130 | 77% |
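The Total Program Placement row pools the counts (100 placed out of 130) rather than averaging the two group rates. The sketch below compares both approaches. The pooled figure weights every participant equally. The two figures diverge whenever groups differ in size. The helper names are illustrative.

```python
# (placed in jobs, total participants) per group, copied from the example table
groups = {
    "Technical Skills Program": (60, 80),
    "Soft Skills Training": (40, 50),
}


def placement_rate(placed, total):
    """Share of a group's participants who were placed in jobs."""
    if total <= 0:
        raise ValueError("total must be positive")
    return placed / total


def pooled_rate(groups):
    """Overall rate from summed counts (how the Total row is built)."""
    placed = sum(p for p, _ in groups.values())
    total = sum(t for _, t in groups.values())
    return placement_rate(placed, total)


def mean_of_group_rates(groups):
    """Unweighted average of the per-group rates, shown for contrast."""
    rates = [placement_rate(p, t) for p, t in groups.values()]
    return sum(rates) / len(rates)


print(f"Pooled: {pooled_rate(groups):.1%}")
print(f"Mean of group rates: {mean_of_group_rates(groups):.1%}")
```

The script prints 76.9% (100 of 130) for the pooled rate and 77.5% for the simple mean of 75% and 80%.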

4. Beneficiary Satisfaction Rate

- Definition: The level of satisfaction among program participants regarding the quality and outcomes of the program.

- Target: 85% satisfaction rate.

- Data Collection: Collect feedback through surveys, focus groups, or one-on-one interviews. Use Likert scales or qualitative responses to gauge satisfaction.

- Analysis: A high satisfaction rate indicates that the program is meeting participant expectations, while a low rate can help identify specific aspects of the program needing improvement (e.g., content, delivery methods, facilities).

Example Data:

| Survey Question | Strongly Agree | Agree | Neutral | Disagree | Strongly Disagree | Average Satisfaction Score |
|---|---|---|---|---|---|---|
| “The program content met my expectations.” | 50% | 30% | 10% | 5% | 5% | 4.2/5 |
| “The trainers were knowledgeable and helpful.” | 55% | 35% | 5% | 3% | 2% | 4.4/5 |
| “I feel confident in applying the skills I learned.” | 60% | 30% | 5% | 3% | 2% | 4.4/5 |
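The target is stated as a percentage (85%), while the example table reports average scores, so the two cannot be compared directly. One common convention is a "top-two-box" rate: the share of respondents who chose Agree or Strongly Agree. This convention is an assumption here, not a method taken from SayPro. A minimal sketch:

```python
SATISFACTION_TARGET = 0.85  # the 85% satisfaction target stated above

# Shares per option, ordered Strongly Agree -> Strongly Disagree (from the example table)
responses = {
    "The program content met my expectations.": (50, 30, 10, 5, 5),
    "The trainers were knowledgeable and helpful.": (55, 35, 5, 3, 2),
    "I feel confident in applying the skills I learned.": (60, 30, 5, 3, 2),
}


def top_two_box(percentages):
    """Share of responses in the two most positive options."""
    total = sum(percentages)
    if total <= 0:
        raise ValueError("response distribution is empty")
    return (percentages[0] + percentages[1]) / total


for question, distribution in responses.items():
    rate = top_two_box(distribution)
    verdict = "meets target" if rate >= SATISFACTION_TARGET else "below target"
    print(f"{rate:.0%} ({verdict})  {question}")
```

Under this reading, the content question (80%) falls short of the 85% target even though its average score is 4.2/5. The other two questions (90% each) meet the target.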

5. Financial Efficiency

- Definition: Measures the financial health of the program, ensuring it stays within budget while achieving its objectives.

- Target: Keep program expenses within 95% of the allocated budget.

- Data Collection: Track all program expenses (e.g., staff costs, materials, logistics) against the allocated budget.

- Analysis: A well-managed program will operate within or under budget. Variances need to be investigated to ensure resources are being utilized efficiently.

Example Data:

| Budget Category | Allocated Budget | Actual Spending | Variance |
|---|---|---|---|
| Staff Salaries | $50,000 | $48,000 | -$2,000 |
| Training Materials | $10,000 | $9,500 | -$500 |
| Travel and Logistics | $5,000 | $6,000 | +$1,000 |
| Total Program Budget | $65,000 | $63,500 | -$1,500 |
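The Variance column is actual spending minus allocated budget, so negative values mean under budget. The sketch below recomputes the variances from the example figures and calculates overall utilization. It flags any line that went over budget for investigation. The list layout and names are illustrative.

```python
# (category, allocated, actual) in dollars, copied from the example table
budget = [
    ("Staff Salaries", 50_000, 48_000),
    ("Training Materials", 10_000, 9_500),
    ("Travel and Logistics", 5_000, 6_000),
]


def variance(allocated, actual):
    """Actual minus allocated: negative = under budget, positive = over budget."""
    return actual - allocated


for category, allocated, actual in budget:
    v = variance(allocated, actual)
    note = "over budget, investigate" if v > 0 else "within budget"
    print(f"{category}: {v:+,} ({note})")

total_allocated = sum(allocated for _, allocated, _ in budget)
total_actual = sum(actual for _, _, actual in budget)
utilization = total_actual / total_allocated
print(f"Total: {variance(total_allocated, total_actual):+,} "
      f"({utilization:.1%} of allocated budget spent)")
```

Overall utilization here is 97.7% (63,500 of 65,000). The phrase "within 95% of the allocated budget" can be read in more than one way, so the sketch reports utilization rather than a pass/fail verdict.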

6. Training Delivery Success

- Definition: Measures the success of training delivery in terms of participant understanding, engagement, and completion.

- Target: 90% of participants complete the training with a minimum passing score.

- Data Collection: Collect data from training assessments (e.g., tests, quizzes) and track participant progress throughout training.

- Analysis: The completion rate and test scores indicate how effectively the training materials were delivered and absorbed by participants.

Example Data:

| Training Module | Participants Completed | Participants Passed | Passing Rate |
|---|---|---|---|
| Introduction to Digital Skills | 100 | 95 | 95% |
| Job Interview Preparation | 80 | 70 | 88% |
| Communication Skills | 90 | 85 | 94% |

7. Retention Rate

- Definition: The percentage of participants who continue in the program until completion, reflecting program engagement and participant satisfaction.

- Target: 85% retention rate.

- Data Collection: Track the number of participants who start the program and complete it, noting any dropouts or interruptions.

- Analysis: A high retention rate suggests that the program maintains participant interest and addresses any issues promptly.

Example Data:

| Program Phase | Participants Started | Participants Completed | Retention Rate |
|---|---|---|---|
| Initial Enrollment | 150 | 140 | 93% |
| Mid-Program Training | 140 | 125 | 89% |
| Final Certification | 125 | 110 | 88% |
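Per-phase retention looks at only one phase at a time. Chaining the phases shows how many of the original starters reach the end. The sketch below assumes that each phase begins with exactly the previous phase's completers, which holds for the example figures (140 → 140, 125 → 125). The helper names are hypothetical.

```python
# (phase, participants started, participants completed), copied from the example table
phases = [
    ("Initial Enrollment", 150, 140),
    ("Mid-Program Training", 140, 125),
    ("Final Certification", 125, 110),
]


def phase_retention(started, completed):
    """Share of a phase's starters who completed that phase."""
    if started <= 0:
        raise ValueError("started must be positive")
    return completed / started


def end_to_end_retention(phases):
    """Completers of the last phase divided by starters of the first phase.

    Assumes each phase starts with exactly the previous phase's completers.
    """
    return phases[-1][2] / phases[0][1]


for name, started, completed in phases:
    print(f"{name}: {phase_retention(started, completed):.0%}")
print(f"End to end: {end_to_end_retention(phases):.0%}")
```

Every phase clears the 85% target on its own, but only 110 of the 150 starters (about 73%) complete the final phase. The data can therefore be read two ways, depending on whether the target applies per phase or end to end.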

8. Outcome Impact (e.g., Skills Acquisition, Employment Success)

- Definition: Measures the direct impact of the program on participants’ skills, employability, or other outcome-based objectives.

- Target: 80% of participants report improved skills or employment.

- Data Collection: Surveys, interviews, or follow-up studies with participants to assess the skills gained or job placements secured after the program.

- Analysis: Impact measurements show the real-world effectiveness of the program in achieving its stated outcomes, such as improving employability or personal development.

Example Data:

| Outcome | Target | Achieved (Measured Impact) | Comments |
|---|---|---|---|
| Improved Digital Skills | 80% | 85% | Most participants felt they gained valuable digital skills |
| Secured Employment Post-Training | 75% | 70% | Lower due to slower job market recovery after training completion |

These SayPro KPIs and Performance Indicators provide measurable insights into various aspects of program performance, from participant engagement to financial health. Tracking these metrics helps program managers adjust their strategies and improve outcomes over time.

-

SayPro Program progress reports

SayPro Program Progress Report Template

Purpose: This report provides a comprehensive overview of a program’s progress, tracking its alignment with set goals, milestones, financial targets, and key performance indicators (KPIs). It also highlights any challenges encountered and any corrective actions taken.

Program Name:

[Insert Program Name]

Reporting Period:

[Insert Start Date] – [Insert End Date]

Date of Report:

[Insert Date]

Prepared By:

[Insert Name/Title]

1. Executive Summary

- Overview of Program:

[Provide a brief summary of the program, including its main objectives, activities, and target audience.]

- Key Highlights:

[Briefly summarize key achievements during the reporting period, such as completed milestones, successes, or positive feedback.]

- Challenges Encountered:

[Mention any major issues faced, such as delays, resource limitations, or external factors impacting the program.]

2. Progress Against Program Objectives

| Objective | Target | Achieved | Status | Comments |
|---|---|---|---|---|
| Example: Increase job placement rate | 75% job placement | 70% job placement | Slightly Behind | Slightly behind target due to delayed placements |
| Example: Deliver 5 training modules | 5 modules delivered | 5 modules delivered | Achieved | All training modules completed on time |
| Example: Improve participant retention | 80% retention rate | 85% retention rate | Exceeded | Higher retention rate due to engagement strategies |

3. Financial Summary

| Budget Category | Allocated Budget | Spent | Variance | Comments |
|---|---|---|---|---|
| Example: Program Staff | $50,000 | $45,000 | -$5,000 | Under budget due to delayed hiring |
| Example: Training Materials | $10,000 | $9,000 | -$1,000 | Savings on material costs |
| Example: Travel and Logistics | $5,000 | $6,500 | +$1,500 | Higher costs due to unexpected travel needs |

- Total Program Budget: [Insert total amount]

- Total Expenditure: [Insert total amount]

- Remaining Budget: [Insert amount]

4. Key Performance Indicators (KPIs)

| KPI | Target Value | Actual Value | Status | Comments |
|---|---|---|---|---|
| Example: Participant Satisfaction Rate | 85% | 88% | Achieved | Excellent feedback from participants |
| Example: Program Completion Rate | 90% | 85% | On Track | Minor delays in training completion |
| Example: Job Placement Rate | 75% | 70% | Slightly Behind | Delays in partner agreements impacted placement |

5. Milestone Progress

| Milestone | Target Date | Completion Date | Status | Comments |
|---|---|---|---|---|
| Example: Initial Beneficiary Enrollment | January 15, 2025 | January 12, 2025 | Completed | Ahead of schedule |
| Example: Mid-Program Review | February 28, 2025 | [Insert Date] | In Progress | Review meeting scheduled |
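The Status and Comments for a milestone row can be derived from its two dates. Below is a minimal sketch with hypothetical helper names. It treats a row with no completion date as In Progress and does not check whether the target date has already passed.

```python
from datetime import date
from typing import Optional


def schedule_variance_days(target: date, completed: date) -> int:
    """Days between target and completion: positive = early, negative = late."""
    return (target - completed).days


def milestone_status(target: date, completed: Optional[date]) -> str:
    """Status text for one milestone row (hypothetical helper)."""
    if completed is None:
        return "In Progress"
    days = schedule_variance_days(target, completed)
    unit = "day" if abs(days) == 1 else "days"
    if days > 0:
        return f"Completed ({days} {unit} ahead of schedule)"
    if days < 0:
        return f"Completed ({-days} {unit} late)"
    return "Completed (on schedule)"


# Example rows from the milestone table
print(milestone_status(date(2025, 1, 15), date(2025, 1, 12)))
print(milestone_status(date(2025, 2, 28), None))
```

The first row comes out as "Completed (3 days ahead of schedule)", which matches the "Ahead of schedule" comment in the table.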

6. Challenges and Issues

- Challenge 1:

[Describe the first major challenge, its impact, and how it is being addressed.]

Example: Low participant enrollment due to limited outreach in remote areas. We are addressing this by increasing local outreach and collaborating with community organizations.

- Challenge 2:

[Describe the second challenge, its impact, and how it is being addressed.]

Example: Delays in training module delivery caused by unexpected travel restrictions. We have adapted by offering virtual training options.

7. Corrective Actions Taken

- Action 1:

[Describe the first corrective action taken to address challenges.]

Example: Expanded outreach efforts by utilizing local community networks and social media platforms to engage potential participants.

- Action 2:

[Describe the second corrective action taken.]

Example: Transitioned in-person training to online sessions, ensuring continuity of the program despite travel restrictions.

8. Next Steps and Recommendations

- Next Steps:

[Outline the immediate actions or steps to be taken in the coming month, such as upcoming milestones, meetings, or adjustments to the program.]

Example: Prepare for the final phase of the program, including job placement follow-ups and closing evaluations.

- Recommendations:

[Provide any suggestions for adjustments or improvements to the program moving forward.]

Example: Increase virtual engagement activities to maintain high participant involvement, especially in remote areas.

9. Conclusion

- Summary:

[Briefly summarize the program’s status, including key achievements and areas requiring attention. Provide a clear view of where the program stands at the end of the reporting period.]

10. Attachments (If Applicable)

- Attachment 1: Participant Satisfaction Survey Results

- Attachment 2: Detailed Financial Report

- Attachment 3: KPIs Dashboard

- Attachment 4: Survey Data/Progress Charts

Sign-Off

Program Manager:

[Insert Name]

[Signature]

[Date]

Team Lead(s):

[Insert Name(s)]

[Signature(s)]

[Date]

This SayPro Program Progress Report template provides a structured format for tracking and reporting the performance, financial health, and outcomes of a program. By including key milestones, challenges, corrective actions, and recommendations, it ensures that program stakeholders have a clear view of the progress and any areas requiring attention.


-

SayPro Ensure the report is well-organized and presents key findings in a digestible format, with charts, graphs, and other visuals as necessary

Effectiveness Analysis and Continuous Improvement Report

1. Introduction

This report evaluates organizational effectiveness by assessing key performance indicators (KPIs), ensuring continuous improvement, leveraging data-driven decision-making, and maintaining accountability and transparency.

2. Effectiveness Analysis

2.1 Key Performance Indicators (KPIs)

- Productivity rates

- Project completion time

- Customer satisfaction scores

- Financial performance

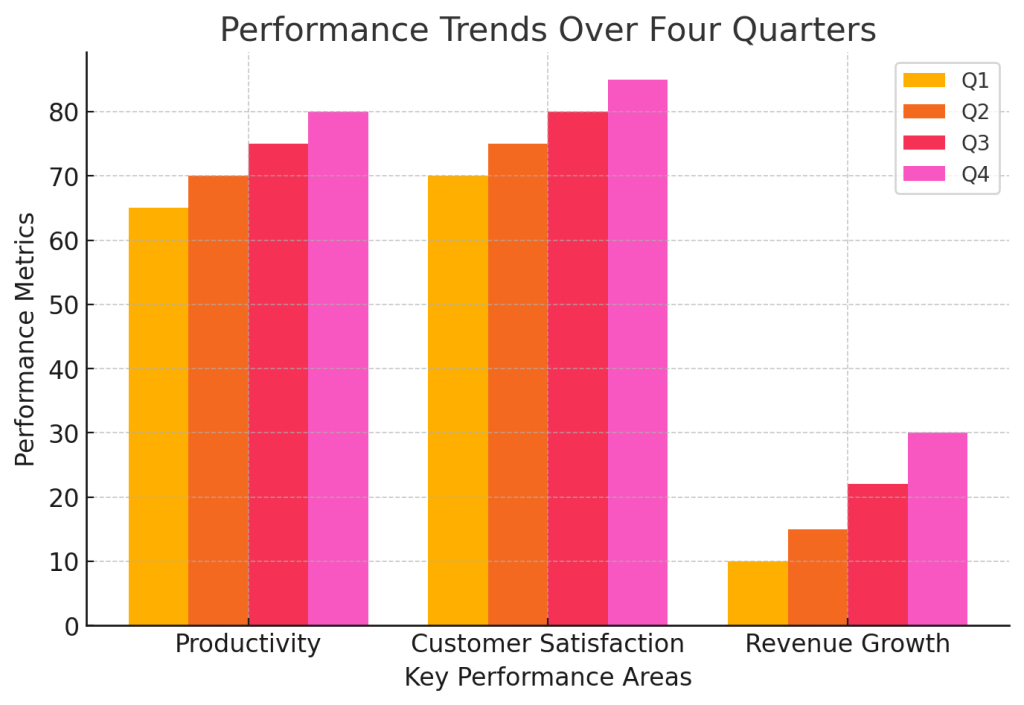

2.2 Bar Graph: Effectiveness Metrics Over Time

3. Ensuring Continuous Improvement

3.1 Strategies for Continuous Improvement

- Regular feedback loops

- Employee training programs

- Process optimization initiatives

- Innovation and technology integration

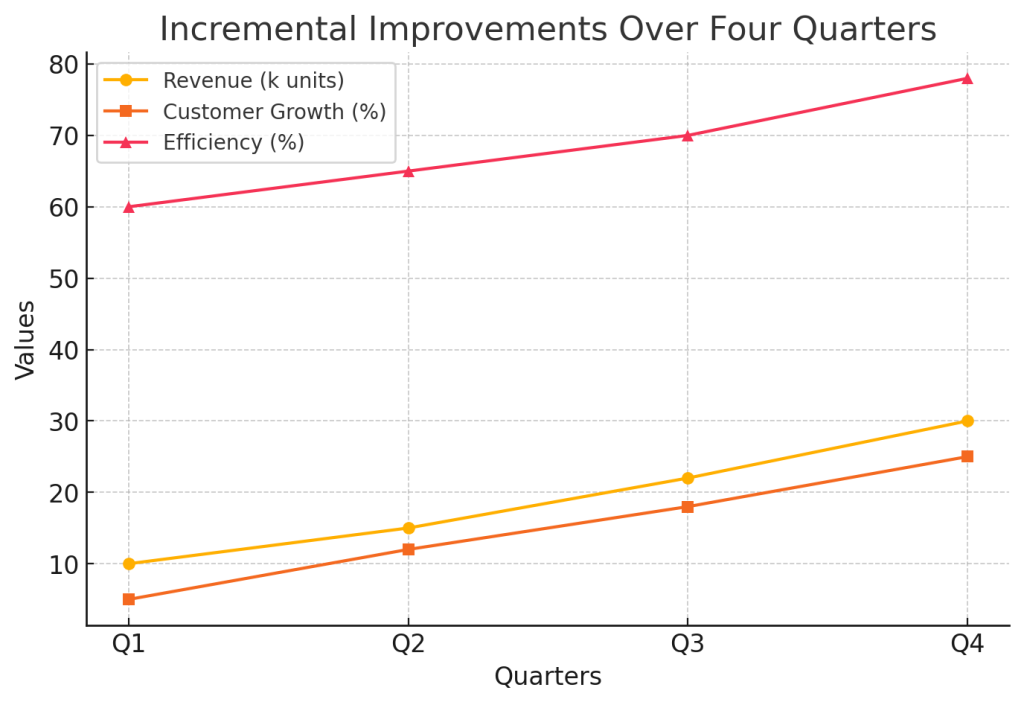

3.2 Line Graph: Improvement Trends Over Five Years

4. Data-Driven Decision-Making

4.1 Importance of Data Utilization

- Enhanced accuracy in strategic planning

- Predictive analytics for risk management

- Improved resource allocation

4.2 Pie Chart: Data Utilization in Decision-Making

(A pie chart illustrating the percentage of decisions based on historical data, predictive analytics, and market trends.)

5. Accountability and Transparency

5.1 Measures to Enhance Accountability

- Clear role definitions

- Performance tracking tools

- Regular audits

5.2 Transparency Framework

- Open data sharing policies

- Public performance reports

- Stakeholder engagement initiatives

5.3 Table: Transparency Initiatives and Their Impact

| Initiative | Impact |
|---|---|
| Open reports | Increased trust |
| Audit system | Improved compliance |
| Stakeholder meetings | Better decision alignment |

6. Conclusion

This report highlights the importance of a structured approach to measuring effectiveness, fostering continuous improvement, leveraging data for decisions, and ensuring accountability. Implementing these strategies will drive long-term organizational success.

-

SayPro Quarterly Targets: Each project or program should have clear, measurable goals

SayPro Quarterly Targets Template

Purpose: This document outlines the specific, measurable, and time-bound goals for each project or program for the quarter. It ensures alignment with broader organizational objectives and provides clear milestones, financial targets, and outcome-based objectives to track progress.

Program Name:

[Insert Program Name]

Quarter:

[Insert Quarter and Year, e.g., Q1 2025]

Date:

[Insert Date]

Prepared By:

[Insert Name/Title]

1. Program Overview

- Program Description:

[Provide a brief summary of the program’s purpose, target audience, and key activities.]

- Key Stakeholders:

[List major stakeholders, such as project teams, partners, beneficiaries, and funders.]

2. Quarterly Goals and Milestones

A. Milestones (Specific Key Dates/Deliverables)

| Milestone/Objective | Target Date | Responsible Party | Status (To Be Filled) | Progress Notes (To Be Filled) |
|---|---|---|---|---|
| Example: Conduct Initial Training Session | March 15, 2025 | Program Manager | Pending | |
| Example: Complete Beneficiary Enrollment | March 30, 2025 | Enrollment Coordinator | Pending | |
| Example: Submit Quarterly Report | April 5, 2025 | Monitoring & Evaluation Team | Pending | |

B. Financial Targets

| Target Description | Target Amount | Responsible Party | Status (To Be Filled) | Progress Notes (To Be Filled) |
|---|---|---|---|---|
| Example: Budget Allocation for Q1 | $100,000 | Finance Department | Pending | |
| Example: Secure Additional Funding | $25,000 | Fundraising Team | Pending | |
| Example: Program Expenses (Staff, Travel, etc.) | $40,000 | Program Manager | Pending | |

C. Outcome-Based Objectives

| Objective | Target | Measurement Method | Responsible Party | Status (To Be Filled) | Progress Notes (To Be Filled) |
|---|---|---|---|---|---|
| Example: Increase Participant Engagement | 80% engagement rate | Survey & Participation Data | Program Coordinator | Pending | |
| Example: Job Placement Success Rate | 75% placement rate | Tracking System & Surveys | Employment Support Team | Pending | |
| Example: Improve Training Satisfaction | 85% satisfaction rate | Post-training Surveys | Training Manager | Pending | |

3. Key Performance Indicators (KPIs)

| KPI | Target Value | Responsible Party | Measurement Method | Status (To Be Filled) | Progress Notes (To Be Filled) |
|---|---|---|---|---|---|
| Example: Participant Retention Rate | 90% | Program Coordinator | Monthly Retention Tracking | Pending | |
| Example: Completion Rate for Training | 85% | Training Department | Course Completion Data | Pending | |
| Example: Beneficiary Satisfaction Rate | 80% | Monitoring & Evaluation Team | Feedback Surveys | Pending | |

4. Risks and Mitigation Strategies

| Risk | Impact | Mitigation Strategy | Responsible Party | Status (To Be Filled) | Progress Notes (To Be Filled) |
|---|---|---|---|---|---|
| Example: Low Participant Enrollment | High | Increase outreach through social media and community engagement | Enrollment Team | Pending | |
| Example: Delays in Training Delivery | Medium | Hire additional trainers and adjust timeline | Program Manager | Pending | |

5. Financial Summary (Quarterly)

- Total Budget for Quarter:

[Insert amount]

- Estimated Costs:

[Insert breakdown of major expenses (e.g., staff, training, materials)]

- Funding Sources:

[Insert details on funding sources, e.g., internal funds, grants, partnerships]

- Projected Revenue (if applicable):

[Insert revenue forecast, if relevant to the program]

6. Evaluation and Monitoring

- Progress Review Timeline:

[Insert dates for key progress reviews throughout the quarter (e.g., mid-quarter review on March 15, 2025, end-of-quarter review on April 5, 2025).]

- Monitoring Methods:

[Describe how progress will be monitored (e.g., monthly reports, tracking systems, regular team check-ins).]

- Evaluation Criteria:

[List key factors that will determine the success of the program at the end of the quarter, such as achievement of objectives, financial health, stakeholder feedback, and other KPIs.]

7. Conclusion

- Summary:

[Provide a summary of the targets and expectations for the quarter. This should align the team’s efforts and provide clarity on what needs to be accomplished.]

- Next Steps:

[Outline any immediate actions to be taken, including team assignments or important upcoming dates.]

Sign-Off

Program Manager:

[Insert Name]

[Signature]

[Date]

Team Lead(s):

[Insert Name(s)]

[Signature(s)]

[Date]

This SayPro Quarterly Targets Template provides a structured approach for setting clear and measurable goals for each program or project. By defining milestones, financial objectives, and outcome-based targets, it ensures that all stakeholders are aligned and that progress can be monitored effectively throughout the quarter.


-

SayPro Monthly Evaluation Summary Template: A summary document that consolidates the evaluation findings.

SayPro Monthly Evaluation Summary Template

Purpose: This template is designed to provide a consolidated summary of the evaluation findings, analysis, and recommendations for a given month. It helps stakeholders quickly understand program performance, identify areas for improvement, and determine necessary actions moving forward.

Program Name:

[Insert Program Name]

Evaluation Period:

[Insert Month/Year]

Date of Report:

[Insert Date]

Prepared By:

[Insert Name/Title]

1. Executive Summary

- Overview:

[Provide a brief summary of the program, including its objectives, key activities, and target audience for the month.]

- Key Findings:

[Summarize the most important evaluation findings from the month. This can include successes, challenges, and any notable outcomes or trends.]

- Impact Summary:

[Provide a quick overview of the impact achieved during the month. For example, improvements in skills, job placements, or other program goals.]

2. Program Performance

- Objectives:

[Restate the primary program goals for the month.]

- Progress Against Objectives:

[Provide a summary of how the program performed relative to its objectives. Include key performance metrics, such as participant enrollment, completion rates, job placements, and skill development.]

Example:

- Goal 1: Improve job placement rate:

- Actual: 75% placement rate (Goal: 70%)

- Status: Exceeded

- Goal 2: Deliver training modules on time:

- Actual: 90% of modules completed on schedule

- Status: On Track


- Performance Indicators:

[Summarize key performance indicators (KPIs) and their status for the month.]

Example:

- Participant Retention Rate: 85%

- Satisfaction Rate: 90% (from stakeholder feedback surveys)

- Completion Rate: 80%

3. Analysis of Results

- Successes:

[Provide an analysis of areas where the program exceeded expectations or had notable success. This could include high participant satisfaction, excellent feedback on certain activities, or positive outcomes in terms of employment or skill acquisition.]

Example:

- High Participant Satisfaction: 90% of participants reported being satisfied with the training modules, particularly in the areas of technical skills development.

- Challenges:

[Provide an analysis of challenges encountered during the month. This could include issues like low attendance, delays in training sessions, or difficulties in job placements.]

Example:

- Challenge 1: Low participation in virtual workshops due to connectivity issues.

- Challenge 2: Delays in onboarding new partners for job placement.

- Root Cause Analysis:

[Identify potential root causes for the challenges faced, and link them to any systemic or operational issues that need attention.]

Example:

- Root Cause for Low Participation: Inconsistent internet access in certain regions.

- Root Cause for Job Placement Delays: Lack of clarity in communication with partners about expected timelines.

4. Recommendations

- For Addressing Challenges:

[Provide specific recommendations to address the identified challenges from the month.]

Example:

- Challenge: Low participation in virtual workshops due to internet connectivity.

- Recommendation: Offer mobile-friendly versions of workshops and provide internet data allowances for participants in remote areas.


- For Improving Program Outcomes:

[Provide specific suggestions for enhancing the program’s effectiveness and efficiency.]

Example:

- Recommendation: Enhance partner communication by setting clear expectations on job placement timelines and regularly checking in on progress.

- For Scaling Successes:

[Provide suggestions for leveraging the successes or positive aspects of the program for future growth.]

Example:

- Recommendation: Expand the use of interactive webinars and success stories in marketing materials, as they have proven effective in engaging participants.

5. Conclusion

- Summary of Key Insights:

[Provide a brief conclusion summarizing the key insights from the evaluation, focusing on performance, challenges, and next steps.]

Example:

- Overall, the program has made strong progress toward achieving its objectives, particularly in job placement and participant satisfaction. However, there are areas requiring attention, such as improving virtual workshop attendance and strengthening partner relationships to avoid delays.

- Next Steps:

[Outline the next steps or actions to be taken based on the findings and recommendations.]

Example:

- Follow up with internet service providers to explore data-sharing options for remote participants.

- Schedule a meeting with key partners to address job placement delays and set clear deadlines for upcoming months.

6. Attachments (If Applicable)

- Attachment 1: Survey Results

[Include any relevant data or survey results that support the findings.] - Attachment 2: Performance Metrics

[Include detailed charts, graphs, or tables showcasing key performance indicators.]

- Attachment 3: Stakeholder Feedback

[Include a summary or complete set of feedback from stakeholders.]

Sign-Off

Program Manager:

[Insert Name]

[Signature]

[Date]

Reviewer(s):

[Insert Name(s)]

[Signature(s)]

[Date]

This SayPro Monthly Evaluation Summary Template provides a streamlined format to capture essential insights, performance metrics, and actionable recommendations on a monthly basis. It ensures that all stakeholders have a clear understanding of the program’s progress and the steps needed to optimize future performance.


SayPro Stakeholder Feedback Form Template: A survey or questionnaire template used to gather input

SayPro Stakeholder Feedback Form Template

Purpose: This template is designed to collect valuable feedback from stakeholders and beneficiaries involved in the SayPro program. The feedback gathered will be used to assess program effectiveness, identify areas for improvement, and guide future program decisions.

Program Name:

[Insert Program Name]

Stakeholder Name (Optional):

[Insert Name]

Role/Position:

[Insert Role/Position]

Date:

[Insert Date]

Section 1: General Program Feedback

- How would you rate your overall experience with the program?

(Select one)

- ☐ Very Satisfied

- ☐ Satisfied

- ☐ Neutral

- ☐ Dissatisfied

- ☐ Very Dissatisfied

- How well did the program meet your expectations?

(Select one)

- ☐ Exceeded Expectations

- ☐ Met Expectations

- ☐ Below Expectations

- What aspects of the program did you find most beneficial?

(Check all that apply)

- ☐ Training/Skills Development

- ☐ Networking Opportunities

- ☐ Job Placement Support

- ☐ Mentorship

- ☐ Resources Provided

- ☐ Other (Please specify): _______________

- What aspects of the program could be improved?

(Open-ended)

[Insert text box for response]

Section 2: Program Content and Delivery

- How would you rate the quality of the program content?

(Select one)

- ☐ Excellent

- ☐ Good

- ☐ Average

- ☐ Poor

- How relevant was the program content to your needs?

(Select one)

- ☐ Very Relevant

- ☐ Somewhat Relevant

- ☐ Not Relevant

- Was the program delivered in a way that was easy to understand and engage with?

(Select one)

- ☐ Yes, very clear

- ☐ Mostly clear, with a few challenges

- ☐ No, it was difficult to understand

- How satisfied were you with the mode of delivery (e.g., in-person, virtual, hybrid)?

(Select one)

- ☐ Very Satisfied

- ☐ Satisfied

- ☐ Neutral

- ☐ Dissatisfied

- ☐ Very Dissatisfied

Section 3: Support and Resources

- How would you rate the support provided by program staff and facilitators?

(Select one)

- ☐ Excellent

- ☐ Good

- ☐ Average

- ☐ Poor

- Did you feel adequately supported throughout the program?

(Select one)

- ☐ Yes, fully supported

- ☐ Somewhat supported

- ☐ Not supported

- How useful were the resources (e.g., materials, tools, guides) provided during the program?

(Select one)

- ☐ Very Useful

- ☐ Somewhat Useful

- ☐ Not Useful

Section 4: Outcomes and Impact

- Have you seen improvements in your skills or career as a result of participating in the program?

(Select one)

- ☐ Yes, significant improvement

- ☐ Some improvement

- ☐ No improvement

- Do you feel more confident in your ability to apply the skills learned in the program?

(Select one)

- ☐ Very Confident

- ☐ Confident

- ☐ Not Confident

- Have you been able to secure employment or advancement opportunities as a result of the program?

(Select one)

- ☐ Yes

- ☐ No

- ☐ In progress

- What additional support or resources would have helped you achieve more through the program?

(Open-ended)

[Insert text box for response]

Section 5: Final Comments and Suggestions

- What did you like most about the program?

(Open-ended)

[Insert text box for response]

- What suggestions do you have for improving the program?

(Open-ended)

[Insert text box for response]

- Any other comments or feedback you would like to share?

(Open-ended)

[Insert text box for response]

Section 6: Consent for Further Contact

- Would you be willing to participate in follow-up interviews or surveys to provide more detailed feedback?

(Select one)

- ☐ Yes

- ☐ No

- Would you be open to providing a testimonial or sharing your success story for future program promotions?

(Select one)

- ☐ Yes

- ☐ No

Thank you for your feedback!

Your input is valuable and will help us improve future program offerings. We appreciate your time and contributions.

This SayPro Stakeholder Feedback Form Template is designed to gather essential feedback from stakeholders and beneficiaries, helping SayPro to continuously assess program effectiveness, identify areas for improvement, and make necessary adjustments. It combines both quantitative and qualitative questions to ensure a comprehensive view of stakeholder experiences.
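The select-one rating questions in this form (for example, overall experience and mode of delivery) yield quantitative data that can be tallied into a percentage per option plus an average rating. A minimal Python sketch, assuming responses are exported using the option labels printed on the form and scored from 1 (Very Dissatisfied) to 5 (Very Satisfied); the scoring and the `summarize_ratings` helper are illustrative assumptions, not part of the SayPro template:

```python
from collections import Counter

# Illustrative 1-5 scoring for the five-option satisfaction questions.
# The numeric mapping is an assumption, not defined by the template.
SATISFACTION_SCALE = {
    "Very Satisfied": 5,
    "Satisfied": 4,
    "Neutral": 3,
    "Dissatisfied": 2,
    "Very Dissatisfied": 1,
}


def summarize_ratings(responses: list[str]) -> tuple[dict[str, float], float]:
    """Return the percentage of respondents choosing each option and the mean 1-5 score.

    Raises KeyError for labels outside the scale, so typos in exported data surface early.
    """
    if not responses:
        raise ValueError("no responses to summarize")
    scores = [SATISFACTION_SCALE[r] for r in responses]  # also validates every label
    counts = Counter(responses)
    total = len(responses)
    shares = {label: 100 * counts[label] / total for label in SATISFACTION_SCALE}
    return shares, sum(scores) / total


if __name__ == "__main__":
    answers = ["Very Satisfied", "Very Satisfied", "Satisfied", "Satisfied", "Neutral"]
    shares, average = summarize_ratings(answers)
    print(shares)              # percentage per option, including options nobody chose
    print(round(average, 1))   # mean rating on the 1-5 scale
```

Rejecting unknown labels rather than skipping them keeps mistyped or mis-exported answers from silently shifting the average.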


SayPro Corrective Action Plan Template: A document that outlines the steps needed

SayPro Corrective Action Plan Template

Purpose: This document serves as a structured framework for addressing issues identified during program evaluations, reviews, or assessments. It outlines the corrective actions required to resolve specific problems, improve program performance, and ensure alignment with objectives.

Program Name:

[Insert Program Name]

Program Manager:

[Insert Name]

Date:

[Insert Date]

Review Period:

[Insert Start Date] to [Insert End Date]

1. Identified Issue(s)

- Issue 1: [Insert Issue Title]

- Description:

[Provide a detailed description of the issue, including how it was identified and its impact on the program.]

- Impact on Program:

[Explain the consequences of this issue on program outcomes, efficiency, or participant experience.]


- Issue 2: [Insert Issue Title]

- Description:

[Provide a detailed description of another issue.]

- Impact on Program:

[Explain the impact of this issue.]


[Repeat as necessary for other issues.]

2. Corrective Action Plan

Action for Issue 1: [Insert Issue Title]

- Objective:

[Describe the desired outcome or objective that the corrective action aims to achieve.]

- Corrective Actions:

[List the specific steps that will be taken to resolve this issue.]

- Step 1: [Describe the first step, including any required resources or personnel.]

- Step 2: [Describe the second step, including any required resources or personnel.]

- Step 3: [Describe any additional steps.]

- Responsible Party:

[Insert name or team responsible for implementing the corrective action.]

- Timeline:

[Insert start and end dates for each action.]

- Expected Outcome:

[Describe the expected results after implementing the corrective action.]

Action for Issue 2: [Insert Issue Title]

- Objective:

[Describe the desired outcome or objective that the corrective action aims to achieve.]

- Corrective Actions:

[List the specific steps that will be taken to resolve this issue.]

- Step 1: [Describe the first step.]

- Step 2: [Describe the second step.]

- Step 3: [Describe any additional steps.]

- Responsible Party:

[Insert name or team responsible for implementing the corrective action.]

- Timeline:

[Insert start and end dates for each action.]

- Expected Outcome:

[Describe the expected results after implementing the corrective action.]

[Repeat as necessary for other issues.]

3. Monitoring and Evaluation

- Monitoring Mechanism:

[Describe how the implementation of the corrective actions will be monitored, including any progress tracking methods such as regular meetings, reports, or key performance indicators (KPIs).]

- Evaluation of Effectiveness:

[Explain how the effectiveness of the corrective actions will be evaluated. This could include specific evaluation metrics, feedback from stakeholders, or follow-up assessments.]
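Progress tracking for the corrective actions can be as simple as a list of steps with owners and end dates that is checked at each review meeting. A minimal Python sketch, assuming each step is recorded with its issue, responsible party, and end date; the `ActionStep` record, the helper functions, and the example steps and dates are hypothetical:

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ActionStep:
    """One corrective action step (hypothetical fields mirroring the template)."""
    issue: str
    step: str
    responsible: str
    due: date
    completed: bool = False


def overdue_steps(steps: list[ActionStep], as_of: date) -> list[ActionStep]:
    """Steps past their end date that are not yet complete, earliest deadline first."""
    late = [s for s in steps if not s.completed and s.due < as_of]
    return sorted(late, key=lambda s: s.due)


def completion_pct(steps: list[ActionStep]) -> float:
    """Share of steps marked complete, as a percentage (0.0 for an empty plan)."""
    return 100 * sum(s.completed for s in steps) / len(steps) if steps else 0.0


if __name__ == "__main__":
    # Hypothetical plan based on the example issues used elsewhere in these templates.
    plan = [
        ActionStep("Low workshop attendance", "Publish mobile-friendly sessions",
                   "Training team", date(2025, 3, 15), True),
        ActionStep("Low workshop attendance", "Arrange data allowances",
                   "Operations", date(2025, 3, 31)),
        ActionStep("Placement delays", "Agree deadlines with partners",
                   "Program manager", date(2025, 3, 10)),
    ]
    for s in overdue_steps(plan, as_of=date(2025, 4, 1)):
        print(f"OVERDUE: {s.step} ({s.responsible}, due {s.due})")
    print(f"{completion_pct(plan):.0f}% of steps complete")
```

Sorting overdue steps by deadline puts the longest-outstanding items at the top of the agenda for the next check-in.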

4. Resources Needed

- Personnel:

[List any additional staff, trainers, or experts needed to implement the corrective actions.]

- Budget:

[Provide a rough estimate of any financial resources needed to support the corrective actions, such as additional training costs, technology upgrades, or administrative support.]

- Other Resources:

[List any other resources required, such as external partnerships, tools, or technology.]

5. Risk Assessment and Mitigation

- Potential Risks:

[Identify potential risks or obstacles to successfully implementing the corrective actions, such as resistance from staff or budget constraints.]

- Mitigation Strategies:

[Describe the strategies that will be put in place to minimize or address these risks.]

6. Conclusion

- Summary of Actions:

[Provide a brief summary of the corrective actions being taken and their expected impact on program performance.]

- Next Steps:

[Outline the next steps following the corrective action implementation, including any follow-up meetings, check-ins, or reporting requirements.]

Sign-Off

Program Manager:

[Insert Name]

[Signature]

[Date]

Responsible Party(ies):

[Insert Name(s)]

[Signature(s)]

[Date]

This SayPro Corrective Action Plan Template provides a systematic approach to addressing issues identified during program evaluations or reviews. It ensures that the program is aligned with its goals and can effectively resolve challenges to improve performance and achieve desired outcomes.


SayPro Impact Evaluation Report Template: A standardized format to assess the outcomes

SayPro Impact Evaluation Report Template

Purpose: The purpose of this template is to standardize the format used to evaluate the impact and effectiveness of each SayPro program. It captures the program’s outcomes, performance metrics, analysis, and insights for continuous improvement.

Program Name:

[Insert Program Name]

Program Manager:

[Insert Name]

Review Period:

[Insert Start Date] to [Insert End Date]

Date of Report:

[Insert Date]

Prepared By:

[Insert Name/Title]

1. Executive Summary

- Program Overview:

[Provide a brief description of the program, its objectives, and the target audience.]

- Key Findings:

[Summarize the most important evaluation findings, including the program’s successes, challenges, and key recommendations for improvement.]

- Impact on Participants:

[Provide a high-level overview of the program’s impact on participants, such as job placements, skill development, or other outcomes.]

2. Program Objectives

- Primary Goal(s):

[State the primary goals of the program and the specific outcomes expected.]

- Secondary Goal(s):

[List any secondary goals or sub-objectives.]

3. Evaluation Methodology

- Data Collection Methods:

[Describe the methods used to gather data for the evaluation, such as surveys, interviews, focus groups, participant feedback, etc.]

- Sample Size and Demographics:

[Provide details about the participants or stakeholders involved in the evaluation (e.g., number of participants, demographic breakdown).]

- Performance Metrics:

[List the key performance indicators (KPIs) used to assess the program’s success. Examples: participant retention rates, job placement rates, skill improvement, satisfaction levels.]

4. Impact Analysis

- Key Performance Metrics:

- Participant Enrollment:

[Insert number of participants enrolled in the program.]

- Completion Rate:

[Insert percentage of participants who completed the program.]

- Job Placement Rate:

[Insert percentage of participants who secured employment post-program.]

- Skill Development:

[Insert an assessment of the skills participants gained, such as communication, technical skills, or job readiness.]

- Satisfaction Score:

[Insert average participant satisfaction score, if applicable.]


- Program Outcomes:

[Provide an analysis of the program’s outcomes based on the data collected. Discuss how the program met or fell short of its objectives.]

- Example: “80% of participants reported improved job readiness, with 60% securing employment within 3 months of program completion.”

- Long-Term Impact:

[Describe the long-term impact the program has had on participants or the community, such as career advancement, income growth, or professional networking.]
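The key performance metrics above reduce to simple ratios over participant records. A minimal Python sketch, assuming one hypothetical record per enrolled participant; note the assumption that the job placement rate is measured against participants who completed the program rather than all enrollees, a choice each report should state explicitly:

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Participant:
    """Hypothetical per-participant record; field names are assumptions."""
    completed: bool
    employed_after: bool  # secured employment after the program
    satisfaction: Optional[int] = None  # 1-5 rating, None if no survey response


def impact_metrics(participants: list[Participant]) -> dict:
    """Compute enrollment, completion rate, placement rate, and mean satisfaction.

    Rates are percentages; a metric is None when its denominator is zero.
    """
    enrolled = len(participants)
    completers = [p for p in participants if p.completed]
    placed = sum(p.employed_after for p in completers)
    ratings = [p.satisfaction for p in participants if p.satisfaction is not None]
    return {
        "enrolled": enrolled,
        "completion_rate_pct": 100 * len(completers) / enrolled if enrolled else None,
        # Assumption: placement rate uses completers, not all enrollees, as the denominator.
        "job_placement_rate_pct": 100 * placed / len(completers) if completers else None,
        "avg_satisfaction": mean(ratings) if ratings else None,
    }


if __name__ == "__main__":
    cohort = [
        Participant(completed=True, employed_after=True, satisfaction=5),
        Participant(completed=True, employed_after=False, satisfaction=4),
        Participant(completed=False, employed_after=False),
        Participant(completed=True, employed_after=True, satisfaction=3),
    ]
    print(impact_metrics(cohort))
```

Returning None instead of 0 for an empty denominator avoids reporting a misleading "0%" for a cohort that has not yet produced any completers.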

5. Successes and Achievements

- Achievement 1:

[Describe a major success or accomplishment of the program, such as the successful job placement of a large number of participants or positive feedback from employers.]

- Achievement 2:

[Describe another success, such as an innovative initiative that was implemented or a particularly high-impact partnership formed.]

[Repeat as necessary for other achievements.]

6. Challenges and Areas for Improvement

- Challenge 1:

[Describe a key challenge encountered during the program, such as low participation, technology issues, or barriers to employment for participants.]

- Impact: [Explain the impact of this challenge on the program’s outcomes.]

- Recommended Solution: [Provide suggestions for addressing this challenge in future iterations of the program.]

- Challenge 2:

[Describe another challenge.]

- Impact: [Explain the impact.]

- Recommended Solution: [Provide suggestions for improvement.]

[Repeat as necessary for other challenges.]

7. Stakeholder Feedback

- Beneficiaries/Participants:

[Summarize feedback gathered from program participants regarding their experience, including strengths, weaknesses, and suggestions for improvement.]

- Employers/Partners:

[Summarize feedback from employers or program partners on the effectiveness of the program, particularly in relation to job placements, skills, and candidate performance.]

- Staff and Internal Stakeholders:

[Summarize feedback from program staff or internal teams regarding program delivery, resources, and any internal challenges faced.]

8. Recommendations for Future Program Iterations

- Recommendation 1:

[Provide a recommendation for improvement or change, based on the evaluation results. This could be related to curriculum changes, participant support, or partnerships.]

- Recommendation 2:

[Provide another recommendation.]

[Repeat as necessary.]

9. Conclusion

- Summary of Findings:

[Provide a concise summary of the key findings of the evaluation.]

- Final Thoughts:

[Offer concluding remarks on the program’s overall success and areas for continued development.]

10. Appendices (If Applicable)

- Appendix 1: Survey Results

[Include detailed survey results or other data that support the findings.]

- Appendix 2: Interview Summaries

[Include summaries of key interviews with stakeholders.]

- Appendix 3: Performance Data

[Include additional performance data, such as detailed metrics or visual graphs.]

Sign-Off

Program Manager:

[Insert Name]

[Signature]

[Date]

Reviewer(s):

[Insert Name(s)]

[Signature(s)]

[Date]

This SayPro Impact Evaluation Report Template ensures a structured and standardized approach to evaluating program outcomes. By systematically capturing performance metrics, successes, challenges, and recommendations, this template enables comprehensive program assessments that drive continuous improvement and strategic decision-making.


SayPro Program Review Template: A structured form to capture progress

SayPro Program Review Template

Purpose: The purpose of this template is to capture and document the progress, challenges, and key outcomes of each program under SayPro. It serves as a tool for tracking performance, identifying areas for improvement, and ensuring accountability. This form can be used by program managers, team leaders, and other stakeholders involved in the review process.

Program Name:

[Insert Program Name]

Program Manager:

[Insert Name]

Review Period:

[Insert Start Date] to [Insert End Date]

Date of Review:

[Insert Date]

1. Program Overview

- Program Goal(s):

[Briefly describe the primary goals of the program.]

- Target Audience:

[Describe the demographic or group the program is designed for.]

- Key Activities/Deliverables:

[List the major activities or outputs of the program, such as training sessions, workshops, or events.]

2. Program Progress

- Milestones Achieved:

[List the key milestones reached during the review period.]

- Example: Completed first round of training sessions for 100 participants.

- Example: Secured partnerships with 3 industry employers for job placements.

- Performance Indicators:

[Provide data on the key performance indicators (KPIs) being tracked. Include both qualitative and quantitative metrics, such as completion rates, job placements, and participant satisfaction.]

- Example Metrics:

- Number of participants enrolled: [Insert Number]

- Job placement rate: [Insert Percentage]

- Participant satisfaction score: [Insert Rating]

- Number of training modules completed: [Insert Number]


3. Challenges Encountered

- Issue 1: [Insert Issue Title]

- Description: [Provide a detailed description of the challenge.]

- Impact on Program: [Describe how this issue affected the program’s progress or outcomes.]

- Proposed Solution: [Suggest how this challenge can be addressed moving forward.]

- Issue 2: [Insert Issue Title]

- Description: [Provide a detailed description of the challenge.]

- Impact on Program: [Describe how this issue affected the program’s progress or outcomes.]

- Proposed Solution: [Suggest how this challenge can be addressed moving forward.]

[Repeat as necessary for other challenges.]

4. Key Achievements

- Achievement 1:

[Provide a brief description of a major achievement during the review period, such as successful job placements, completion of a significant milestone, or a positive feedback trend.]

- Achievement 2:

[Describe another key success, such as securing new partners, high participant satisfaction rates, etc.]

[Repeat as necessary for other achievements.]

5. Financial Overview

- Program Budget:

[Provide the total budget for the program.]

- Actual Spend:

[Describe the actual expenditure during the review period.]

- Variance:

[Describe any budget discrepancies and the reasons for the variance.]
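The variance is the difference between actual spend and the budget, usually also expressed as a percentage of the budget. A minimal Python sketch, assuming spend is broken down by hypothetical line items and that a positive variance means overspending (the sign convention is an assumption; some finance teams use the opposite):

```python
from typing import Optional


def budget_variance(
    budget: dict[str, float], actual: dict[str, float]
) -> dict[str, tuple[float, Optional[float]]]:
    """Compare planned and actual spend per line item.

    Returns {item: (variance, variance as % of budget)}, sorted by item name.
    Positive variance = overspend (assumed convention). The percentage is None
    for unbudgeted items, where a percentage of a zero budget is undefined.
    """
    report = {}
    for item in sorted(budget.keys() | actual.keys()):
        planned = budget.get(item, 0.0)
        spent = actual.get(item, 0.0)
        variance = spent - planned
        pct = 100 * variance / planned if planned else None
        report[item] = (variance, pct)
    return report


if __name__ == "__main__":
    # Hypothetical line items and amounts, for illustration only.
    budget = {"training": 50_000, "stipends": 30_000}
    actual = {"training": 55_000, "stipends": 27_000, "venue": 2_000}
    for item, (var, pct) in budget_variance(budget, actual).items():
        pct_text = f"{pct:+.1f}%" if pct is not None else "unbudgeted"
        print(f"{item}: {var:+,.0f} ({pct_text})")
```

Listing unbudgeted items separately, rather than dropping them, makes it easier to explain the reasons for the variance as the template asks.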

6. Stakeholder Feedback

- Beneficiaries/Participants:

[Summarize feedback from participants, including satisfaction levels, challenges they faced, and any suggestions for improvement.]

- Partners/Employers:

[Summarize feedback from partners or employers regarding the program’s outcomes, such as job placements, candidate quality, and satisfaction.]

- Staff and Program Teams:

[Summarize internal feedback regarding program execution, resources, and challenges faced.]

7. Areas for Improvement

- Improvement 1:

[Describe an area where the program can improve based on the challenges or feedback from stakeholders.]

- Improvement 2:

[Describe another area for improvement, such as streamlining processes, enhancing training content, or addressing participant needs.]

[Repeat as necessary.]

8. Next Steps and Recommendations

- Action Plan for Improvement:

[List the specific actions to be taken to address the identified areas for improvement.]

- Future Milestones:

[List any upcoming milestones for the next review period.]

- Resources Needed:

[Describe any resources (budget, personnel, partnerships, etc.) needed to implement the improvements.]

9. Sign-Off

Program Manager:

[Insert Name]

[Signature]

[Date]

Reviewing Stakeholder(s):

[Insert Name(s)]

[Signature(s)]

[Date]

This template ensures a comprehensive review of each program under SayPro, offering a structured approach to assess progress, identify challenges, and drive improvements. It helps stakeholders focus on the key elements of the program’s success and areas that require corrective action or refinement.
